Global Bidirectional Remote Haptic Live Entertainment by Virtual Beings

This project demonstrates global bidirectional remote haptic live entertainment in real time.

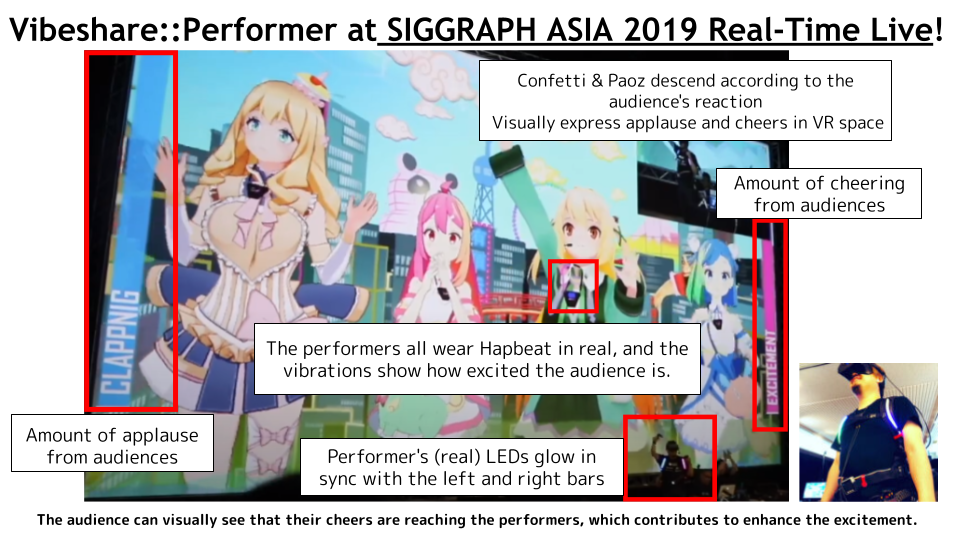

Real-Time Live! audiences can create confetti and gifts on the stage with their applause and laughter!

Players' interactions are shared through the “Hapbeat” device.

The haptic state is visualized in Virtual Cast.

The stimulus is also visualized by LEDs on the actors.

We will perform non-verbal interaction between Tokyo and Brisbane virtually.

Shooting game between Tokyo and Brisbane.

Give us your laughter and applause if we hit the apple!

Technical Overview

This technical demonstration shows the next generation of virtual reality live entertainment, using a global cloud network and haptic displays for VTubers. Performers will play real-time 3D characters at REALITY Studio in Tokyo and interact with the SIGGRAPH ASIA audience in Brisbane, Australia. They can see and feel the audience's applause and cheers via haptic displays, and the emotional information will be displayed in the show for a diverse audience. The scenario will explore the future possibilities of Virtual Beings in real-time graphics and interaction, made possible by haptic feedback, emotion analysis, and real-time translation and subtitling. The project looks ahead to 5G and the next generation of networked live entertainment, using a global bidirectional remote network and haptic displays for VTubers.

SIGGRAPH is the special interest group on computer graphics and interactive techniques. Most of the audience will have extensive experience in graphics, but this demo tackles barriers of distance, language, culture, and hearing or visual disabilities for the next generation of augmented human beings. The remote performers will harmonize with the audience's applause and cheers on site. Audience emotion will be visualized for a diverse audience, including people with hearing and/or visual disabilities.

The pipeline, including haptic feedback, analysis, compatibility, and stability, is built on current cloud network services and edge computing using mobile PCs and smartphones. We will open two YouTube live streams, one for each side, and haptic information will be sent via an embedded audio channel. The live demo will also support people with hearing or visual disabilities through the Hapbeat device paired with an ordinary smartphone, but the key feature will be the interactive real-time visualization of audience emotion, including facial expressions, applause, and cheering. Real-time subtitling (based on Windows Azure + Google Translation + a pre-made scenario) may help overcome the language barrier among SIGGRAPH audiences.
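To illustrate the idea of carrying haptic information inside an audio channel, here is a minimal sketch in Python. It is not the project's actual encoding, which the overview does not describe. It assumes one simple scheme: each haptic intensity value scales the amplitude of a low-frequency sine carrier for a fixed frame, and the receiver recovers the intensity from the frame's RMS level. The sample rate, carrier frequency, frame length, and function names are all hypothetical choices made for the example.

```python
import math

# All constants below are assumptions for illustration, not values from the project.
SAMPLE_RATE = 48_000    # audio sample rate in Hz
CARRIER_HZ = 100.0      # low-frequency carrier a vibrotactile actuator could reproduce
FRAME_SAMPLES = 4_800   # 100 ms of audio per haptic intensity value


def encode_haptics(intensities):
    """Turn intensities in [0, 1] into float audio samples (amplitude-modulated sine)."""
    samples = []
    for frame_index, level in enumerate(intensities):
        level = min(max(level, 0.0), 1.0)  # clamp out-of-range input
        start = frame_index * FRAME_SAMPLES
        for n in range(start, start + FRAME_SAMPLES):
            phase = 2.0 * math.pi * CARRIER_HZ * n / SAMPLE_RATE
            samples.append(level * math.sin(phase))
    return samples


def decode_haptics(samples):
    """Recover one intensity per complete frame from the RMS level of the carrier."""
    levels = []
    for start in range(0, len(samples) - FRAME_SAMPLES + 1, FRAME_SAMPLES):
        frame = samples[start:start + FRAME_SAMPLES]
        rms = math.sqrt(sum(s * s for s in frame) / len(frame))
        # The RMS of a sine wave is amplitude / sqrt(2), so undo that factor.
        levels.append(rms * math.sqrt(2.0))
    return levels


if __name__ == "__main__":
    sent = [0.0, 0.5, 1.0]
    received = decode_haptics(encode_haptics(sent))
    print([round(v, 3) for v in received])
```

Each frame holds a whole number of carrier cycles (10 cycles per 100 ms at 100 Hz), which is why the RMS estimate recovers the amplitude cleanly in this lossless round trip. A real stream passes through lossy audio compression and network jitter, so a practical decoder would need to tolerate distortion and timing drift.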

Teaser

Archive

Related Solution

Credit

Contributor:

GREE VR Studio Lab – Akihiko Shirai, Yusuke Yamazaki

EXR, Inc. – Kensuke Koike

NTT DOCOMO, Inc. – Yoshitaka Soejima

Cast:

Kilin: Akina Homoto

Xi: Emiri Iwai

MilkyQueen – Mai Princess: Inori R Oguruma

Lea IMAI: Akihiko Shirai

Music:

“520” / Tacitly – (C) NTT DOCOMO, INC. (C) MIGU.,Ltd

Special Thanks:

Just Pro – Ayumi Mino

Virtual Cast, Inc.

Hapbeat LLC.

WFLE, Inc. – all “REALITY” team

(C) GREE, Inc.

Related Events in SIGGRAPH

- SIGGRAPH 2020 Birds of a Feather: Virtual Beings World “New Play Together”

- SIGGRAPH ASIA 2019 Real-Time Live! Global Bidirectional Remote Haptic Live Entertainment by Virtual Beings

- Virtual Beings World in SIGGRAPH 2019

- SIGGRAPH ASIA 2018 Real-Time Live!